Kubernetes: Installation

Intro

I’ve been working with Docker containers for a while, and even my development environment has been dockerized, but apart from creating and running Docker images I haven’t gone any further. Nowadays containers are everywhere, and there are many products for provisioning and orchestrating them. I’d say that the most important one is Kubernetes.

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

As a result I’ve created a Vagrant installation to ease the creation of a Kubernetes cluster with flannel as a POD network and metallb as load balancer.

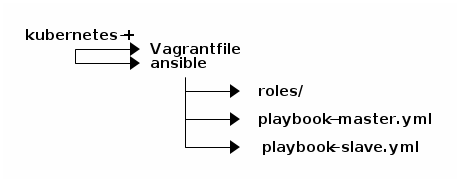

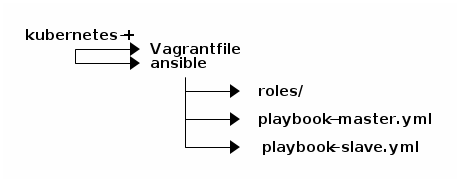

Structure

Before creating the cluster I need to create two virtual machines (master + slave) and install the libraries Kubernetes requires in order to work. Because I’m using Vagrant and Ansible to create and provision the master and slave virtual machines, both must be installed before going any further.
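Since both tools have to be present before anything else runs, a quick pre-flight check can save a failed build. The following is only a minimal sketch in Ruby (the language of the Vagrantfile); the helper name `missing_tools` is hypothetical and not part of the original setup. It looks for executables named `vagrant` and `ansible-playbook` in the directories listed in `PATH`.

```ruby
# Hypothetical pre-flight helper: returns the entries of `tools` that cannot
# be found as executable files in any directory listed in `path`.
def missing_tools(tools, path = ENV.fetch("PATH", ""))
  dirs = path.split(File::PATH_SEPARATOR)
  tools.reject do |tool|
    dirs.any? do |dir|
      candidate = File.join(dir, tool)
      File.file?(candidate) && File.executable?(candidate)
    end
  end
end

missing = missing_tools(%w[vagrant ansible-playbook])
if missing.empty?
  puts "All required tools found"
else
  puts "Missing tools: #{missing.join(', ')}"
end
```

The check only confirms that the commands exist; it says nothing about their versions.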

Vagrant images

| For more information about Vagrant go to https://www.vagrantup.com/ |

For this POC I’ve created a couple of VMs with Vagrant to mimic bare-metal machines.

```ruby
###########################
###### UTIL METHODS #######
###########################

# Extracts the ip of a given node by its role name.
# Returns nil when no node has that role.
def get_ip_by_role(nodes, role)
  node = nodes.find { |_name, ndata| ndata[:role] == role }
  node && node.last[:ip]
end

# Extracts master node ip from the list of nodes
def get_master_ip(nodes)
  get_ip_by_role(nodes, "master")
end

# Extracts nfs server ip
def get_nfs_ip(nodes)
  get_ip_by_role(nodes, "nfs")
end

# Returns "yes" if there is a node with :role => 'nfs', "no" otherwise
def is_nfs_active(nodes)
  nodes.any? { |_name, ndata| ndata[:role] == 'nfs' } ? "yes" : "no"
end

###########################
####### VARIABLES #########
###########################

# nodes to be built
nodes = {
  "baker": {
    ip: "192.168.250.104",
    role: 'nfs',
    memory: "1024",
    disk: "10GB"
  },
  "sherlock": {
    ip: "192.168.250.102",
    role: 'master',
    memory: "4096",
    disk: "10GB"
  },
  "watson": {
    ip: "192.168.250.103",
    role: 'slave',
    memory: "4096",
    disk: "10GB"
  }
}

# cluster default gateway ip
gateway_ip = "192.168.250.1"

# metallb network mask to get ips from the pool
metallb_netmask = "192.168.250.112/29"

# master_ip
master_ip = get_master_ip(nodes)

# mount nfs
nfs_active = is_nfs_active(nodes)
nfs_ip = get_nfs_ip(nodes)
nfs_netmask = "192.168.250.0/24"

# shell provisioning
del_default_gateway = "route del default gw 0"
add_gateway_command = "route add default gw #{ gateway_ip }"

###########################
#### VAGRANT CONFIGURE ####
###########################

Vagrant.configure("2") do |config|
  # loop through all configured nodes
  nodes.each { |name, ndata|
    config.vm.define name do |node|
      node.vm.box = "ubuntu/xenial64"
      node.disksize.size = ndata[:disk]
      node.vm.hostname = name
      node.vm.network "public_network", ip: ndata[:ip]
      node.vm.provision "shell", inline: del_default_gateway
      node.vm.provision "shell", inline: add_gateway_command
      node.vm.provider "virtualbox" do |vb|
        vb.memory = ndata[:memory]
      end
      # each role gets its own playbook: ansible/playbook-<role>.yml
      node.vm.provision "ansible" do |ansible|
        ansible.playbook = "ansible/playbook-#{ ndata[:role] }.yml"
        ansible.extra_vars = {
          master_ip: master_ip,
          metallb_addresses: metallb_netmask,
          nfs_active: nfs_active,
          nfs_netmask: nfs_netmask,
          nfs_ip: nfs_ip
        }
      end
    end
  }
end
```

| Make sure master and slave hostnames are different, otherwise Kubernetes won’t show the slave machine when listing available nodes. |

| Make sure you’ve installed the vagrant-disksize plugin before building the Vagrant boxes: vagrant plugin install vagrant-disksize |
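To make the address plan in the Vagrantfile easier to verify, here is a small sketch using Ruby’s standard `ipaddr` library. It expands the `metallb_netmask` CIDR block into its first and last address and checks that no node IP falls inside the pool. The helper names `pool_bounds` and `nodes_in_pool` are hypothetical; the addresses are copied from the Vagrantfile.

```ruby
require "ipaddr"

# Addresses copied from the Vagrantfile.
NODE_IPS = {
  baker:    "192.168.250.104",
  sherlock: "192.168.250.102",
  watson:   "192.168.250.103"
}.freeze
METALLB_POOL = "192.168.250.112/29"

# Hypothetical helper: first and last address covered by a CIDR block.
def pool_bounds(cidr)
  range = IPAddr.new(cidr).to_range
  [range.first.to_s, range.last.to_s]
end

# Hypothetical helper: names of the nodes whose IP lies inside the pool.
def nodes_in_pool(node_ips, cidr)
  pool = IPAddr.new(cidr)
  node_ips.select { |_name, ip| pool.include?(IPAddr.new(ip)) }.keys
end

first, last = pool_bounds(METALLB_POOL)
puts "MetalLB pool: #{first} - #{last}"
puts "Nodes inside the pool: #{nodes_in_pool(NODE_IPS, METALLB_POOL).inspect}"
```

A /29 block holds eight addresses, so this pool runs from 192.168.250.112 to 192.168.250.119 and does not overlap with the node addresses (.102 to .104).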

Ansible provisioning

| For more information about Ansible go to https://www.ansible.com/ |

The Vagrantfile uses an Ansible playbook to install the system requirements Kubernetes needs in order to work. Depending on whether the node has been declared as master or slave, it will use one playbook or the other. Master playbook:

```yaml
- hosts: all
  become: true
  vars:
    ansible_python_interpreter: /usr/bin/python3
  roles:
    - base
    - kubernetes
    - init
    - podnet
    - metallb
    - nfs_common
    - nfs_client
```

- base: Base libraries
- kubernetes: Kubernetes related libraries: kubeadm, kubectl…
- init: Kubernetes cluster initialization
- podnet: Kubernetes POD network installation
- metallb: Kubernetes MetalLB (Load Balancer) installation

And for the slave nodes:

```yaml
- hosts: all
  become: true
  vars:
    ansible_python_interpreter: /usr/bin/python3
  roles:
    - base
    - kubernetes
    - join
    - nfs_common
    - nfs_client
```

- base: Base libraries
- kubernetes: Kubernetes related libraries: kubeadm, kubectl…
- join: Kubernetes slave node joins master node
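The choice of playbook per node comes from the string interpolation in the Vagrantfile (`ansible/playbook-#{ ndata[:role] }.yml`). The sketch below reproduces just that lookup outside Vagrant, using a trimmed copy of the `nodes` hash; `playbook_for` is a hypothetical helper name used only for illustration.

```ruby
# Trimmed copy of the nodes hash from the Vagrantfile (name and role only).
nodes = {
  baker:    { role: "nfs" },
  sherlock: { role: "master" },
  watson:   { role: "slave" }
}

# Hypothetical helper mirroring the interpolation used for ansible.playbook.
def playbook_for(ndata)
  "ansible/playbook-#{ndata[:role]}.yml"
end

nodes.each do |name, ndata|
  puts "#{name} -> #{playbook_for(ndata)}"
end
```

Note that with an `nfs` role in the node list, this lookup also expects an `ansible/playbook-nfs.yml`, which is not shown in this post.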

Installation

Go to the /kubernetes directory and execute:

```shell
vagrant up
```

The process will prompt you to choose which network device will be used for the bridged connection. Once the process has finished you can log in to the master VM:

```shell
vagrant ssh sherlock
```

And make sure both nodes are up and ready:

```shell
kubectl get nodes
```
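If you would rather check readiness from a script than by eye, one option is to read the output of `kubectl get nodes -o json` and look at each node’s `Ready` condition. The following is a sketch under that assumption, not part of the original setup: `not_ready_nodes` and `report_node_status` are hypothetical helpers, and the script assumes `kubectl` is configured on the machine where it runs (for example the master VM).

```ruby
require "json"
require "open3"

# Hypothetical helper: given the JSON printed by `kubectl get nodes -o json`,
# returns the names of the nodes whose "Ready" condition is not "True".
def not_ready_nodes(nodes_json)
  items = JSON.parse(nodes_json).fetch("items", [])
  pending = items.reject do |node|
    conditions = node.dig("status", "conditions") || []
    conditions.any? { |c| c["type"] == "Ready" && c["status"] == "True" }
  end
  pending.map { |node| node.dig("metadata", "name") }
end

# Hypothetical entry point: runs kubectl and prints a one-line summary.
def report_node_status
  output, status = Open3.capture2("kubectl", "get", "nodes", "-o", "json")
  abort "kubectl failed" unless status.success?
  pending = not_ready_nodes(output)
  puts pending.empty? ? "All nodes are Ready" : "Not ready: #{pending.join(', ')}"
end
```

Calling `report_node_status` shortly after the build may list nodes as not ready, since a node takes a little while to register and report its status.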